Running a massive global social network is no easy task, and not one to be taken lightly. As Elon Musk has been loudly arguing of late, social networks have to be careful about the content they take down because, given their power and reach, a removal can be seen as an attack on free speech. They also have to consider what happens when people abuse that right to incite violence or, even worse, broadcast their own acts of hate. This is a problem that Facebook has run up against recently, according to a quarterly report from the social network’s parent company, Meta.

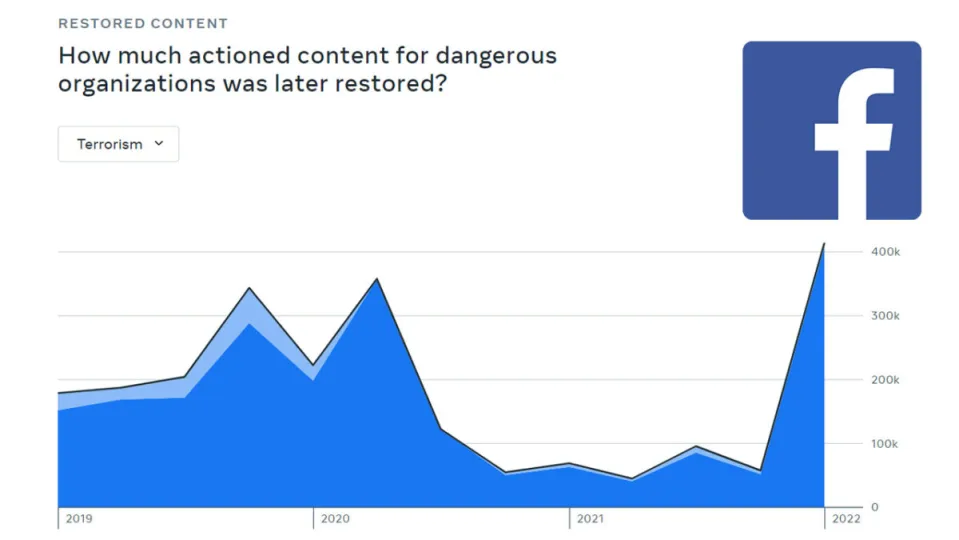

Meta has announced that a bug in Facebook’s code forced the social network to restore over 400,000 pieces of content that were mistakenly flagged as terrorism-related between January and March of 2022. This is an increase of over 350,000 from the previous quarter. The company also said that, as well as restoring the pulled content, it has fixed the bug that caused the issue in the first place.

Facebook has long been mired in controversy over the type of content shared across its network. Many people believe that this very controversy is why the new parent company, Meta, was set up in the first place, in a bid to wipe Facebook’s slate clean and deflect attention. There may be something in this: although Meta is pumping billions of dollars into the development of its VR metaverse, which is the public-facing reason for the rebrand, there is no doubt that the metaverse is still years from catching on properly.

All this latest episode does is highlight that Facebook still doesn’t know how to deal with hate speech on its network in a way that also facilitates free speech between its users. This is a dilemma that Elon Musk would do well to pay attention to, as he really might be biting off more than he can chew with his planned acquisition of Twitter.

Meta is still testing new AI technology that could moderate Facebook content, but there are bugs that need to be worked out before it can help with the job.

Unfortunately, all of this is happening in an environment that is seeing increasing levels of hate and violence broadcast across mainstream platforms. The recent attack in Buffalo was live-streamed on Twitch, with videos of the stream also shared on Twitter and Facebook.

If all this puts you off Facebook, you may want to check out our guide to deleting your Facebook account. If not, read our guide on how to learn what Facebook knows about you.

Image via: Meta